\n The \"status\" tag describes the state of completion: whether it’s a pile of links & snippets & \"notes\", or whether it is a \"draft\" which at least has some structure and conveys a coherent thesis, or it’s a well-developed draft which could be described as \"in progress\", and finally when a page is done—in lieu of additional material turning up—it is simply \"finished\".

\n \n

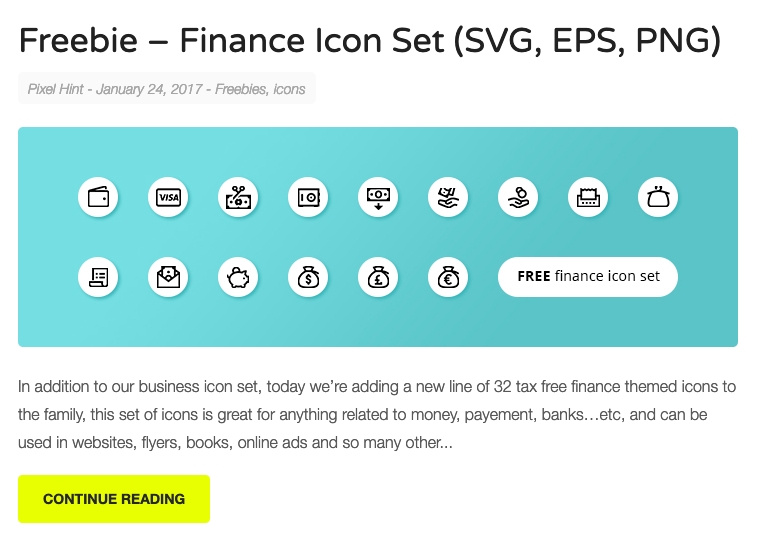

\n\nWith these two ideas together, I finally had a concept (shitty first drafts) and an implementation (status tags) to publish drafts on my blog. Even though I still don’t want to put shitty first drafts out there, I’m okay with putting out good second ones. This concept gives me more freedom to make progress on my content and less pressure to put it out there. It also opens the content to feedback at an early stage.\n\nI’ll try to keep the blog from filling up with drafts, maybe by capping it at three or four. Also, I want to keep track of the changes, so I’ll keep a changelog per post to document the progress.\n\nThis is my latest attempt to publish more content, mostly for self-documentation and to journal my experiences. Instead of doing short rants on social media, I expect to put my thoughts in a place I own."},{"slug":"accessible-read-more-links","published":true,"title":"Accessible \"Read More\" links","excerpt":"A pattern you probably have seen in various blogs is the “Read more” link. The design usually has the title first, then a small excerpt of the content and a “Read more” text link to the full post. ","date":"2019-06-18","status":"Draft","author":{"name":"Juan Olvera"},"ogImage":{"url":"/static/site-feature.png"},"changeLog":{"Thu Jun 13 2019 00:00:00 GMT+0000 (Coordinated Universal Time)":"First draft"},"content":"\n\"Read more\" links are part of a pattern we see in blogs. The design has the title first, then a content excerpt and a text link that takes us to the post page with the full content.\n\n\n\nBlogs have an index page with a list of posts that makes it easier for the general public to choose what to read. This is simple enough for the general public, but it gets complicated for people with disabilities. 
In this specific case, that means people who use screen readers.\n\nThe fix for this issue is simple, yet I see the problem everywhere, from big company websites to simple personal blogs.\n\nBefore I started writing this post, I checked ten blogs, some of them with an excellent reputation, and eight had an inaccessible “Read more” link.\n\nHow can we know if a “Read more” link is inaccessible?\n\n## Screen reader usage\n\nScreen readers generate a list of links without context to help the user map the content of the page. Also, screen reader users usually navigate a page by using the tab key to jump from link to link without reading the content. So a list of “Read more” links is useless and redundant in this context, e.g.,\n\n```markdown\n|- Title for a blog post\n|- Read more\n|- Title for a second blog post\n|- Read more\n|- Third blog post\n|- Read more\n|- Another blog post\n|- Read more\n```\n\n[Also watch “Screen reader demo: don't use 'click here' for links” on YouTube](https://www.youtube.com/watch?v=zGa_rIK1itA)\n\n### Real world examples\n\nLet’s see some examples and inspect the code they use.\n\n#### Example 1\n\n\n\nIn this example, the link is a big button acting as a call to action, but the button by itself lacks context. Continue reading… what?\n\n```html\n